Approximate Ambient Occlusion

Posted in Development by brecht

Some well known animation studios are now using approximate global illumination in feature films: Pixar used ambient occlusion in Ratatouille, DreamWorks used single bounce indirect lighting in Shrek 2, ILM used ambient occlusion in Pirates of the Caribbean 2 & 3. So I looked for an ambient occlusion method that was reasonably simple to implement, and still fast enough to handle the high poly scenes we are dealing with.

Not considering leaves and fur (those are much too noisy to efficiently compute standard ambient occlusion with), our scenes will still have millions of polygons. Using raytraced ambient occlusion as in Blender now would take a long time to render, especially since for animation the result must be noise free, which would require a large number of samples. Instead I worked on an alternative method from NVidia, based on approximating all surfaces as disks (Dynamic Ambient Occlusion and Indirect Lighting [pdf]), which was used by ILM to deal with very high poly meshes in Pirates of the Caribbean 2 & 3, and is also available in PRMan as “point based occlusion”. An advantage of this approach is that it is inherently noise free, though it is not accurate and it does suffer from other artifacts.

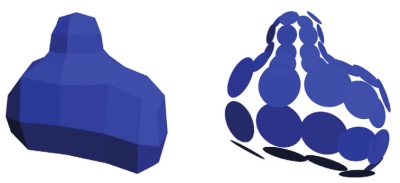

Surface approximated by disks.

The basic idea is quite simple: treat each vertex as a disk, and compute how much each point is occluded by summing together for all the disks how much they occlude that point. Going over all disks in a scene to compute occlusion at one point would be quite slow, so we can cluster together disks into bigger disks, and as we get further from the point we are shading, we use those bigger disks instead. Another thing is that regions can be shadowed too much, since you’re not taking into account if one disk is behind another, shadowing from the same direction twice. In practice it still works quite well even with this approximation, though multiple passes can be used to reduce overshadowing.
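As a rough illustration of the summation and clustering described above, here is a minimal Python sketch. It is not the Blender code: the `Disk` class, the function names, and the `max_ratio` threshold are hypothetical, and the per-disk term uses a common point-to-disk form factor approximation, area · cos(receiver) · cos(emitter) / (π · distance² + area), which is an assumption rather than the exact formula from the NVidia paper.

```python
import math
from dataclasses import dataclass, field


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Disk:
    """A one-sided disk; a cluster is a Disk with children (hypothetical layout)."""
    pos: tuple
    normal: tuple  # assumed to be unit length
    area: float
    children: list = field(default_factory=list)  # empty for a leaf disk


def disk_occlusion(p, n, disk):
    """Approximate occlusion of the point p (unit normal n) by a single disk."""
    v = _sub(disk.pos, p)
    d2 = _dot(v, v)
    if d2 == 0.0:
        return 0.0
    inv_d = 1.0 / math.sqrt(d2)
    direction = (v[0] * inv_d, v[1] * inv_d, v[2] * inv_d)
    cos_r = max(0.0, _dot(n, direction))             # disk is above the receiver's horizon
    cos_e = max(0.0, -_dot(disk.normal, direction))  # disk faces the receiver
    return disk.area * cos_r * cos_e / (math.pi * d2 + disk.area)


def occlusion(p, n, node, max_ratio=0.1):
    """Sum occlusion over a disk hierarchy, using a cluster disk when it is far away."""
    v = _sub(node.pos, p)
    d2 = _dot(v, v)
    # Far away (small area relative to squared distance) or a leaf: use this disk as is.
    if not node.children or node.area < max_ratio * d2:
        return disk_occlusion(p, n, node)
    # Otherwise descend and sum the contributions of the smaller disks.
    return sum(occlusion(p, n, child, max_ratio) for child in node.children)
```

The overshadowing is easy to reproduce with this sketch: for a point at the origin with normal (0, 0, 1), a disk of area π at height 1 and a disk of area 4π at height 2, both facing down, each contribute 0.5. The sum is 1.0, although the second disk is completely hidden behind the first and the correct answer is 0.5. In practice the summed value would be clamped to 1 before being used.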

In my experience this basic method works fairly well, but it still gave a number of artifacts. Part of those could be reduced by increasing the accuracy when traversing disks in the scene, though that made things quite a bit slower, and still didn’t get rid of some of them. Fortunately, a recent method published in the GPU Gems 3 book (chapter High Quality Ambient Occlusion, sorry, no link) solved many of these problems by treating nearby triangles as triangles directly, instead of as disks. My implementation uses part of the code provided with the book by the authors (Jared Hoberock, Yuntao Jia), thanks!

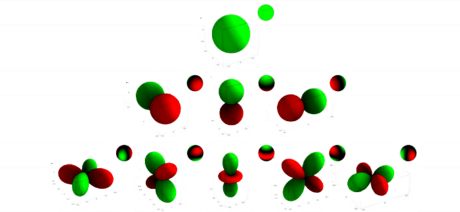

Spherical harmonics up to the 2nd order, as used in our implementation.

However, traversal of the scene still required fairly high accuracy, because of the way bigger disks are created from smaller disks. If two disks point in quite different directions, the averaged disk can give quite different results than using the two disks individually. The first solution I tried for this was to cluster disks together not only by position, but also by normal. While this worked well to get rid of artifacts, it resulted in traversal time that was perhaps slower than necessary, since disks were not clustered together spatially that well. The second approach I tried approximates the sum of disks with spherical harmonics, as used in PRMan and explained in the Point-Based Graphics book, chapter 8.4 (sorry, again no direct link). This means we don’t have to cluster by normal anymore. In the end it does not seem much faster, but it does seem to avoid some artifacts at lower accuracy.
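To make the difference between an averaged disk and a spherical harmonics approximation concrete, here is a small Python sketch. It rests on assumptions: it stores only the directional projected area of a cluster of one-sided disks, uses the standard real spherical harmonics basis for bands 0 to 2 (9 coefficients) with the usual clamped cosine factors π, 2π/3 and π/4, and all function names are hypothetical. The actual implementation may store and evaluate things differently.

```python
import math


def sh_basis(d):
    """Real spherical harmonics for bands 0..2, evaluated at the unit vector d."""
    x, y, z = d
    return [
        0.282095,
        0.488603 * y, 0.488603 * z, 0.488603 * x,
        1.092548 * x * y, 1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z, 0.546274 * (x * x - y * y),
    ]


# Per-band factors that turn Y_lm(normal) into the expansion of max(0, normal . d).
CLAMPED_COS = [math.pi] + [2.0 * math.pi / 3.0] * 3 + [math.pi / 4.0] * 5


def project_disks(disks):
    """Sum the SH coefficients of area * max(0, normal . d) over (normal, area) pairs."""
    coeffs = [0.0] * 9
    for normal, area in disks:
        for i, (band, y) in enumerate(zip(CLAMPED_COS, sh_basis(normal))):
            coeffs[i] += area * band * y
    return coeffs


def projected_area_sh(coeffs, d):
    """Approximate projected area of the cluster as seen from direction d."""
    return sum(c * y for c, y in zip(coeffs, sh_basis(d)))


def projected_area_exact(disks, d):
    """Reference: add up each disk's projected area individually."""
    return sum(area * max(0.0, sum(a * b for a, b in zip(normal, d)))
               for normal, area in disks)


def projected_area_averaged(disks, d):
    """One big disk: total area, area-weighted average normal."""
    total = sum(area for _, area in disks)
    avg = [sum(area * normal[k] for normal, area in disks) for k in range(3)]
    length = math.sqrt(sum(c * c for c in avg))
    if length == 0.0:
        return 0.0
    return total * max(0.0, sum(c / length * dk for c, dk in zip(avg, d)))
```

For two unit-area disks with normals along +x and +z, viewed from the direction (-1, 0, 1)/√2, the individual disks give a projected area of about 0.71 and the averaged disk gives 0, while the 9-coefficient approximation lands in between, much closer to the individual result. The low-order expansion is still only approximate (it can be slightly negative or overshoot), so it trades one kind of error for a smaller one.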

So, starting with disks used for everything, finally the implementation uses no disks directly anymore, but triangles and spherical harmonics approximations of disks. Also note that the way this works is similar to the way subsurface scattering works in Blender, with the extra difficulty here that the occlusion is directional, rather than uniformly distributed with a quick falloff. Both approximate far away surfaces by assuming the contribution of many small elements can be replaced by one big element, which in physics is known as the superposition principle. In this case it only holds approximately, but in computer graphics we are allowed to cheat.

Further work that is needed is making this work efficiently for fur. The standard trick appears to be to compute ambient occlusion at the surfaces, and use that on the strands. However, computing it for all of the strands in the scene (which can be more than 10 million) is probably still not efficient enough, so a method to extrapolate ambient occlusion results over multiple strands is needed. Another thing is that ambient occlusion could be sped up by some form of irradiance caching (this is also true for the raytraced ambient occlusion), though there is probably not enough time to do a full implementation of that. Instead I’m trying a simple screen space method to upsample the results from fewer pixels.

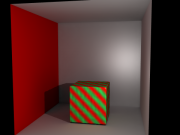

Here are some simple results with Suzanne, on an Intel Core2Duo 3.0Ghz with 2 threads:

Further work could also make this method usable for a single bounce of indirect lighting, though I don’t think we will be able to use that for Peach. A quick prototype implementation showed that this method could indeed support it fairly well, but performance is likely not good enough for Peach.

Currently this new ambient occlusion is not in SVN yet, so you will have to wait a bit to test it, but it will be there soon.

January 11th, 2008 at 6:15 pm

brecht rocks! ooooh yeah!

January 11th, 2008 at 6:22 pm

cool!

January 11th, 2008 at 6:29 pm

awesome!!!

January 11th, 2008 at 6:31 pm

very interesting! and excellent results :D

January 11th, 2008 at 6:42 pm

This is great! Cant wait for it to be released :D

January 11th, 2008 at 7:11 pm

Great Brecht!

January 11th, 2008 at 7:47 pm

droooool

January 11th, 2008 at 8:11 pm

B(lende)RMan!!!

January 11th, 2008 at 8:40 pm

Wow, I’m impressed! Can’t wait for 2.50!!

January 11th, 2008 at 9:17 pm

Looks awesome . keep it going

January 11th, 2008 at 9:57 pm

brecht is the best. I start rendering my animation in February, and was trying to bake AO on the whole set. Now, I may not have to.

Giant-size w00t!

January 11th, 2008 at 11:17 pm

great work!!! very impressed!! Thanks Brecht

January 11th, 2008 at 11:40 pm

It’s excellent :-). Thank you very much, Brecht !

January 11th, 2008 at 11:41 pm

It’s excellent. Thank you very much, Brecht !

January 12th, 2008 at 12:15 am

It’s simply amazing, did this with an old patch

http://www.zanqdo.com/tmp/FastGI.mov

10 seconds per frame

January 12th, 2008 at 12:37 am

that is some of the best news i have heard all day

January 12th, 2008 at 12:56 am

[Quote]Brecht rocks![Quote]

January 12th, 2008 at 1:21 am

Sweeeeet! Can’t wait to test it…

January 12th, 2008 at 1:48 am

Truely Great work brecht, i normally don’t like to use AO, for the reasons you said, but with this i would have no quams in using ao :)

January 12th, 2008 at 4:58 am

Hi Brecht, this is great stuff. Since you’re storing the occlusion points as SH, would it be possible eventually to do stuff like the fake blurry reflections mentioned here? Or is it a bit different… http://mentalraytips.blogspot.com/2006/12/stuff-you-never-even-knew-about-mental.html

January 12th, 2008 at 5:57 am

[quote]The basic idea is quite simple: treat each vertex as a disk, and compute how much each point is occluded by summing together for all the disks how much they occlude that point.[/quote]

what do you mean with point? the vertex? or the pixel that gets shaded?

and what discs get summed together? all discs in the scene which get seen from the current “point”?

sounds very useful though (even if i don’t understand what it exactly does), especially the single bounce thing at the end. :)

January 12th, 2008 at 6:18 am

Matt, they seem to be using SH in a different way. I’m using it to approximate areas of disks in the occluding points.

What they seem to do is while gathering, instead of computing a single value, compute an SH function at that point that gives values in all directions. That’s actually quite a nice trick, and I think it could be implemented for this method as well.

My idea for bump maps and normals maps was to not use them for AO, or at least provide an option to disable them. If I have the time, this might be an interesting solution.

horace, the ‘point’ in that sentence refers to the point in space (and actually a normal as well) at which the occlusion is being computed, e.g. in most cases the pixels. The occlusion from all disks in the scene is summed together, the method doesn’t care if they are seen or not (which is the cause of the overshadowing).

January 12th, 2008 at 6:29 am

Hey Brecht!…you make us Crazy!!!

this is really Amazing!

Thank you so much.

January 12th, 2008 at 1:27 pm

that’s indeed a really great news.

and the single bounce option gives so much depth to blender’s internal engine! I really hope you’ll make it available in the SVN even if it won’t be used in peach.

have you looked at Instant GI specifications also?

http://graphics.uni-ulm.de/Singularity.pdf

January 12th, 2008 at 1:29 pm

just a quick note: the InstantGI is one of Sunflow’s global illumination methods

http://sfwiki.geneome.net/index.php5?title=GI#Instant_GI

January 12th, 2008 at 1:51 pm

As I understand it, IGI can flicker across frames in an animation with moving objects, since the distribution of the point lights might be different each time. I haven’t seen a good solution to that yet.

January 12th, 2008 at 1:56 pm

i think you’re right, so much time spent on static architectural images made me forget the flickering issue!

January 12th, 2008 at 7:11 pm

Brecht – you’re like a bundle of gifts!

That is VERY impressive results, especially the one with the upsampling. Reducing time like this – AND EVEN making it LOOK BETTER – is like exactly what most of us needed. I rarely use AO at work simply because it gives too much noise – and 32 x sampling is so slow that it’s virtually useless even with a Quad processor.

Nice going – I really hope we get to taste your improvements asap. as it will really *no ..REALLY* improve what we do here at work.

/JoOngle

January 12th, 2008 at 10:41 pm

“Wait… p orbitals?” was my first reaction to the second image.

Spherical harmonics apparently have a lot of applications.

January 13th, 2008 at 5:19 pm

Hi, here is just an interesting link given from Alfred (from the french blender forum “zoo”) : http://www.debevec.org/HDRI2004/landis-S2002-course16-prodreadyGI.pdf

January 13th, 2008 at 9:04 pm

This is really good,ambient occlusion is one of the most important thing for realistic lighting.Do you thing is possible make it a node,with arbitrary direction?

I want use is as a big area light source,but a direction vector is needed(i was able some time ago to directional ao with node setup,but the result was too much noisy).

Btw,thanks.

January 14th, 2008 at 3:27 pm

very interesting!

that makes AO practical for animations!

Thanks!

January 14th, 2008 at 4:04 pm

Jarod – If you can´t wait – BAKING your AO would be a good way to go, then just run the rest of the scene without AO as the AO will be baked into your scenes textures ;) Saves a HEAP of time, works like a charm.

January 14th, 2008 at 5:47 pm

Wow! Especially the one bounce indirect lighting has been on top of my wish list a long long time! It’s great that you’ve been hired to work on Blender full time now! Keep up the great work. Things like these lift Blender above the crowd!

January 15th, 2008 at 7:51 pm

:) It’s kind funny; AO it’s already a fast way of global ilumination and now it´s even faster, noise free and with one bounce of I.L. ^_^ Great work

January 15th, 2008 at 11:09 pm

Yay! Thanks Brecht!

January 17th, 2008 at 2:11 pm

SVN !! SVN !!

i’m getting mad waiting for it :)

January 17th, 2008 at 9:14 pm

Yep ! SVN !!!! I love the idea to use AO in my future video :) , thanks ! ;)

January 21st, 2008 at 11:05 pm

WOOOOOW just tested it !!!

http://www.graphicall.org/builds/builds/showbuild.php?action=show&id=560

now that’s FAST and quality !!

WOOOOOOW

gr8 JOB !!

January 22nd, 2008 at 6:44 am

Excellent!!!.

But Approximate Ambient Occlusion Not working in render passes :(

January 22nd, 2008 at 7:37 am

in today SVN working with render passes

January 23rd, 2008 at 6:30 am

Yeeeesss! Now its working with Vectorblur. By the way … this implementation of Approximate Occlusion bombs Blender in the range of Renderman on this special subject. Now add Approx Gi and Blender will noticed by the industry (especially the Matte Painting departments). I “feel” that some BIG Artists watching the progress.

Ah yes … don’t forget micro polygon rendering ;o)

January 23rd, 2008 at 11:30 pm

I just did a quick test on the lipsync shot from the new ManCandy FAQ DVD — I’m really impressed with the quality and super render speed!

Great job Brecht!

http://www.renderplace.com/blenderfiles/ManCandy_AAO_vs_AO.png

-AntonG

January 24th, 2008 at 9:28 am

single bounce.Here I COME

February 3rd, 2008 at 1:42 am

please that is so cool

February 7th, 2008 at 9:31 am

As you seem to be evaluating methods, have a look at how Pixar exploits temporal coherence in global illumination.

http://graphics.pixar.com/ShotRendering/paper.pdf

March 18th, 2008 at 11:27 am

howdy! and NEAT! i’m kinda fuzzy on the details now but didn’t ilm use this technique (including references to gpu gems and pixar) to “downsample” rendering on super highdensity (zbrush detailed) meshes when the detail occupied less than a pixel? is it possible to use your blender implementation to do the same kind of render optimization for things like displacement mapped, hires geometry? … dang, gotta dig out that cinefx article…or was it on the net… dang….

March 18th, 2008 at 11:30 am

http://features.cgsociety.org/story_custom.php?story_id=3889

oooohhhhhh…. i think it’s the same thing.

neeeeeeeaaaaaaat….

jin

July 1st, 2008 at 4:53 pm

Nice blog….good texts! Thanks